What Really Happens When We Outsource Critical Thinking?

By Emma Zande

Fall 2025 Intern

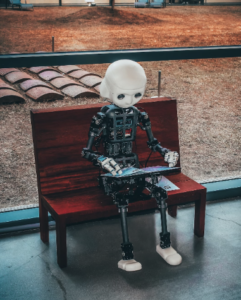

Artificial Intelligence (AI) is officially in the classroom, whether we’re ready or not. Students are using it to crank out study guides and outlines. Educators are using it to give quicker feedback and spark new lesson ideas. And everyone’s hoping it will finally cut through the busywork that’s been draining our time for decades. But before we hand over more of the learning process to AI, we need to ask a bigger question: What does “saving time” actually cost us? Does cutting the busywork sharpen our learning, or does it shortcut it? If we want to make smart choices about AI in education, we need a clear understanding of how it actually shapes our learning.

Does AI Hinder Critical Thinking?

AI can certainly save time, but the time it saves is often time students would otherwise spend brainstorming, planning, and evaluating information. That is exactly the work that builds critical thinking skills, so using AI to cut out this busywork has side effects. Studies have indicated that when individuals rely heavily on AI for information retrieval and decision making, their capacity for critical thinking (reflective problem solving and independent analysis) may decline for several reasons:

Cognitive Offloading: Students who rely too heavily on a calculator can see their mental math skills decline. In the same way, students who rely on AI to carry out complex cognitive processes can lose some of their ability to think analytically and solve problems independently.

Reduced Analytical Skills: AI has a tendency to reduce complex problems to quick answers, which allows students to bypass the cognitive difficulty required for deep critical analysis. When they don’t grapple with nuance, students never get the chance to develop sophisticated analytical skills.

Increased Susceptibility to Errors: AI synthesizes huge amounts of information, but it isn’t always accurate, and it can confidently present false or distorted content (known as “hallucinations”). When students treat AI as a source of truth, they’re more likely to introduce errors into their work.

Diminished Motivation: Critical thinking follows a simple rule: use it or lose it. When students routinely lean on AI to make decisions, their motivation to think independently can weaken over time.

What’s the Difference Between Students and Educators Using AI?

With AI entering education in so many ways, it’s important to understand how AI use differs for students and educators. Both groups experience cognitive offloading when they use AI for tasks they’d normally do themselves, and both can see their critical thinking skills weaken as a result. But students are still developing those skills, so they risk not just weakening their critical thinking but never fully developing it. They haven’t yet built the knowledge base they need to use AI as a supplement rather than a substitute.

Educators, on the other hand, face a different challenge: setting boundaries on AI use. When educators use AI but restrict students from doing the same, they can create resentment. With high school and college-aged students especially, teachers should explain how and why they use AI, and why student use is limited. Clear expectations can help build a foundation of trust and model for students what responsible decision-making around AI looks like.

AI will keep getting faster, smarter, and more accessible. But human thinking won’t—unless we actively protect it. As educators, parents, and leaders, we have a responsibility to ensure AI supports learning without replacing the struggle, reflection, and deep engagement that actually builds knowledge. Before we cut out the busywork, we need to be certain we’re not cutting out the learning too.

Publishing Solutions Group

At Publishing Solutions Group (PSG), we recognize that the rapid integration of AI in education is both a powerful opportunity and a significant responsibility. We stay informed on emerging research, practical applications, and evolving conversations about AI’s benefits and risks, from enhancing productivity to potentially affecting critical thinking and equity. Our team is dedicated to understanding not only what AI can do, but what it should do in educational settings.

We believe there is no one-size-fits-all approach to AI. Each school, district, and educator has unique goals, challenges, and values. That’s why we work closely with our clients to develop tailored strategies, resources, and supports that align with their specific needs—whether that means integrating AI tools responsibly, crafting effective AI policies, or designing professional development that helps educators harness AI without undermining student learning.

Our commitment is to help you navigate this changing landscape with clarity and confidence. From evaluating AI-driven content to supporting meaningful digital literacy, PSG is here to ensure that technology serves your educational mission—never replacing the human insight, critical thinking, and relationships at the heart of learning.

Photo by Andrea De Santis for Unsplash